AI is being used to automate just about everything these days, from writing code to making coffee. Observability is no exception. But before we dive into how AI can actually help, it is worth stepping back to look at what already works, what does not, and where the real gaps are.

Telemetry Data

Metrics, logs, traces, events, profiles. Most of us are familiar with these by now. Collecting this kind of data has become relatively easy. You can use OpenTelemetry, eBPF, agents, exporters, or even add manual instrumentation if needed.

If you want to measure something like user signups, API latency, or CPU usage, you can. And once you have the data, you can use it to troubleshoot issues or just enjoy looking at charts that (hopefully) show growth.

At first glance, this all seems well covered. Everything is measurable, so why would we need AI here? But as usual, the hard parts come later.

Storing the Data

There are many tools available to store your telemetry. Some are open source, some are commercial. You can run them in the cloud or on your own infrastructure. Some are affordable, others can get very expensive.

The main challenge here is the volume. Observability data grows quickly and can become hard to manage.

Fortunately, there are well-known ways to keep costs under control. You can use sampling, compression, shorter retention periods, or store everything on-premises. Most companies find a balance that works for them.
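To make the sampling option concrete, here is a minimal sketch of deterministic, ID-based trace sampling. The function name `keep_trace` and the 10% rate are my own illustrative choices, not any particular vendor's API.

```python
import hashlib


def keep_trace(trace_id: str, sample_rate: float) -> bool:
    """Deterministically decide whether to keep a trace.

    Hashing the trace ID (instead of rolling a random number) means every
    service reaches the same decision for the same trace, so a sampled
    trace is kept or dropped as a whole.
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Read the first 8 bytes of the hash as an integer in [0, 2**64)
    # and keep the trace if it falls below the sampling threshold.
    bucket = int.from_bytes(digest[:8], "big")
    return bucket < sample_rate * 2**64


# Illustrative run: keep roughly 10% of 10,000 hypothetical traces.
trace_ids = [f"trace-{i}" for i in range(10_000)]
kept = sum(keep_trace(t, 0.10) for t in trace_ids)
print(f"kept {kept} of {len(trace_ids)} traces")
```

The tradeoff is the one described above: storage drops roughly in proportion to the sample rate, but so does the chance that the one trace you need was kept.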

This part is mostly a tradeoff between cost and flexibility. AI does not add much value here, since the problem is less about understanding and more about storage efficiency.

Looking at the Data

Tools like Grafana, Datadog, and New Relic let you create dashboards, explore your data from different angles, and dig into whatever is happening in your system.

We also have standard query languages now, like PromQL, MetricsQL, TraceQL, and LogQL, which make it easier to work with different types of telemetry data in a consistent way.

AI is starting to show up here as well. You can now ask questions like “Why is my error rate spiking?” using plain language. It is impressive, but it only works well if you already have a rough idea of what you are trying to find.

What’s Still Missing

Back when I was building my previous observability product, which focused on cloud infrastructure and database monitoring, the most common question we got in support was something like this:

“Can you explain this chart? Is this normal? If not, what is wrong? And how do I fix it?”

That stuck with me. People were not looking for more dashboards or charts. What they really wanted were answers, guidance, and clear suggestions on what to do next.

Sure, experienced engineers can often glance at a dashboard and quickly spot what is wrong. But modern systems are becoming more and more complex. There are so many moving parts: different services, databases, message queues, containers, cloud providers, and more. No one can keep the full picture in their head anymore.

This is where I believe AI can make a real difference.

Not by replacing engineers, but by helping them connect the dots, highlighting what stands out, and suggesting possible fixes based on real context. The goal is to spend less time staring at graphs and more time actually fixing the problem.

Approaches to AI-Powered Root Cause Analysis

I’ll admit I’m biased here since I’ve been working on this problem for years, but I’ll try to stay objective and just explain the different approaches I’ve seen. I’ll mention Coroot’s method as a reference, but this is more about the overall landscape.

First, how can we tell if one approach works better than another? There’s no clear benchmark yet, no easy way to measure how good an RCA engine is. But in some cases, common sense helps. For example, we all understand that a tape measure can measure distance but not weight. So if an RCA tool only looks at one type of data, we can usually tell what kinds of problems it will miss.

So let’s classify RCA approaches by the kind of data they use. That gives us a good idea of what root causes they can catch and where they might fall short.

Alert-Based RCA

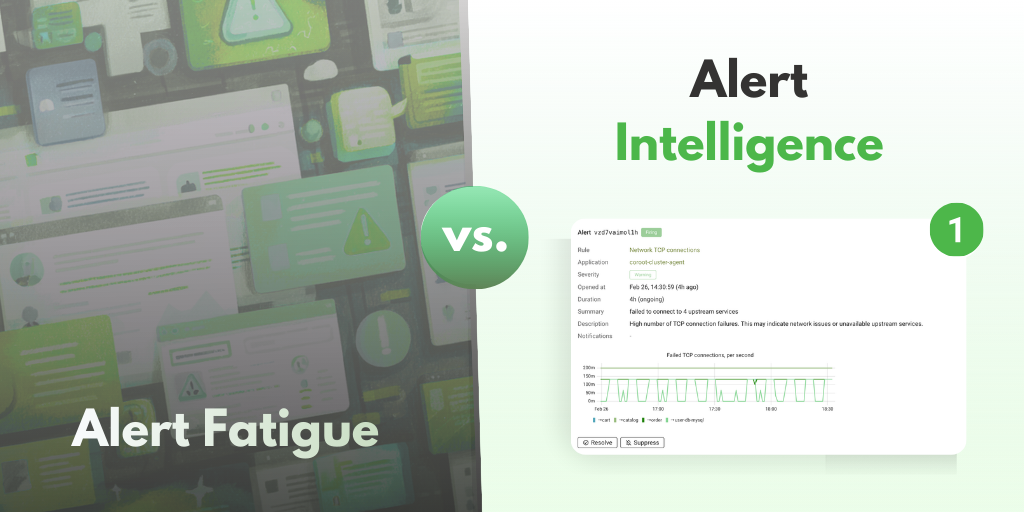

This is the most common approach today. It treats alerts as the main signal. If alerts fire, something must be wrong. If several alerts fire at the same time, the system tries to connect them and figure out what caused what.

Some tools add a bit of intelligence on top, like a language model that can read past incidents or engineer conversations. Others pull in change data from GitHub or CI systems, so the engine knows when a deployment happened. That adds some helpful context. For example, if a service was updated right before the alerts fired, the engine can suggest that as a possible cause.
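The change-correlation idea can be sketched in a few lines. This is only an illustration of the general technique, not how any specific product does it. The `Alert` and `Deployment` types, the 30-minute window, and the sample data are all assumptions I made up for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Alert:
    service: str
    name: str
    fired_at: datetime


@dataclass(frozen=True)
class Deployment:
    service: str
    version: str
    deployed_at: datetime


def suspect_deployments(alerts, deployments, window=timedelta(minutes=30)):
    """Return deployments that happened shortly before the first alert fired."""
    if not alerts:
        return []
    first_alert = min(a.fired_at for a in alerts)
    suspects = [
        d for d in deployments
        if timedelta(0) <= first_alert - d.deployed_at <= window
    ]
    # Most recent change first: it is usually the first thing worth checking.
    return sorted(suspects, key=lambda d: d.deployed_at, reverse=True)


# Hypothetical incident: two alerts and three deployments around noon.
t0 = datetime(2025, 1, 1, 12, 0)
alerts = [
    Alert("checkout", "HighLatency", t0 + timedelta(minutes=10)),
    Alert("frontend", "HighErrorRate", t0 + timedelta(minutes=12)),
]
deployments = [
    Deployment("catalog", "v1.8.0", t0 - timedelta(hours=3)),
    Deployment("checkout", "v2.4.1", t0 + timedelta(minutes=5)),
    Deployment("frontend", "v5.0.0", t0 + timedelta(minutes=20)),
]

for d in suspect_deployments(alerts, deployments):
    print(f"possible cause: {d.service} {d.version} deployed at {d.deployed_at}")
```

Here only the checkout deployment lands inside the window, so it is the one flagged. Note that this is pure timing correlation: it suggests where to look, but it does not prove the deployment caused anything.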

This method works fairly well if your alerts are well-configured, your incidents are documented, and you track changes across the system. But it also has big limitations.

Here is a simple example: Traffic to your service increases. Your Java app starts allocating memory faster. That triggers more frequent garbage collection. GC pauses add latency. Eventually, users start seeing timeouts.

If your alerts only cover latency or error rates, you will see the symptoms but not the full story. The actual problem started much earlier, with memory allocation pressure and GC activity. But there was no alert for that. To figure it out, you would need to dig through different dashboards and piece everything together.

This is the main weakness of alert-based RCA. It only works with what is already visible. If no alert was set up for a certain signal, the engine simply has no idea. And as systems get more complex, this blind spot becomes a real problem.
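Here is a small sketch of that blind spot using the Java example. The metric names, the per-minute values, and the simple "1.5x the baseline" rule are all invented for illustration. The point is only the ordering: the early signals deviate first, but the only alert rule is on latency.

```python
from statistics import mean


def first_deviation(series, baseline_points=4, factor=1.5):
    """Index of the first sample above factor x the baseline mean, or None."""
    baseline = mean(series[:baseline_points])
    for i, value in enumerate(series[baseline_points:], start=baseline_points):
        if value > baseline * factor:
            return i
    return None


# Hypothetical per-minute samples for the Java service.
metrics = {
    "requests_per_second": [100, 100, 100, 100, 160, 200, 240, 280, 300, 300, 300, 300],
    "alloc_rate_mb_per_sec": [50, 50, 50, 50, 60, 80, 100, 120, 140, 150, 150, 150],
    "gc_pause_ms_per_sec": [5, 5, 5, 5, 5, 6, 9, 20, 50, 90, 120, 120],
    "p99_latency_ms": [80, 80, 80, 80, 80, 82, 90, 110, 150, 300, 700, 700],
}
# Assumption: the only alert rule that exists is on latency.
alerted_metrics = {"p99_latency_ms"}

deviations = {name: first_deviation(series) for name, series in metrics.items()}
timeline = sorted((idx, name) for name, idx in deviations.items() if idx is not None)

for idx, name in timeline:
    status = "alert configured" if name in alerted_metrics else "no alert, invisible to the engine"
    print(f"minute {idx}: {name} deviates ({status})")
```

With this data, traffic, allocation rate, and GC pauses all move minutes before latency does, yet an alert-based engine only ever hears about the last one.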

Event-Based RCA

The next popular approach is based on events. In this case, events describe what happened in your system. This could be logs, Kubernetes events, deployment records, and so on.

It is similar to the alert-based approach, but it works with slightly lower-level data. The nice part is that it does not require much input from the user. You do not need to preconfigure alerts or write rules. The system just watches what is happening and tries to connect the dots.

But you can probably guess the problem with this method. It only works if the explanation is already in the events.

Let’s go back to the same example. Your service gets more traffic. The Java app starts allocating more memory. GC activity goes up. That adds latency. Users start getting timeouts.

If your logs or events do not clearly show that memory pressure and GC behavior changed, the system might not catch it. Or worse, it might give you a misleading answer based on unrelated messages in the logs. This method is still better than nothing, but just like the alert-based approach, it can only see what is already written down.

In other words, if the root cause does not show up in the events, this method will not help much either.

Tracing-Based RCA

There is a popular belief that all you need for troubleshooting is traces or wide events. At first glance, that sounds great. You find an affected user request, analyze its trace with AI, pinpoint the slow or broken service, and just like that, you have your answer.

In theory, it makes a lot of sense. But in practice, traces often fall short.

The main issue is that traces only tell you what happened. They rarely explain why it happened. For example, imagine a span shows that handling a request in your Java app took 700 milliseconds. That is useful. But it does not explain why. If the trace also told you that 500 milliseconds were spent in garbage collection, that would be amazing.
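To show what that attribution would look like, here is a minimal sketch that overlays GC pause intervals on a span. It assumes the pause intervals come from some separate source on the same clock as the span, which is exactly the data most traces do not carry. The timestamps are made up to match the 700 and 500 millisecond figures above.

```python
def overlap_ms(span, pauses):
    """Total milliseconds of `pauses` that fall inside `span`.

    Intervals are (start_ms, end_ms) tuples on the same clock.
    Pauses are assumed not to overlap each other.
    """
    span_start, span_end = span
    total = 0
    for pause_start, pause_end in pauses:
        start = max(span_start, pause_start)
        end = min(span_end, pause_end)
        if end > start:
            total += end - start
    return total


# Hypothetical data: one 700 ms span and four stop-the-world GC pauses.
span = (1_000, 1_700)
gc_pauses = [(900, 1_050), (1_200, 1_500), (1_550, 1_700), (1_900, 2_000)]

duration = span[1] - span[0]
in_gc = overlap_ms(span, gc_pauses)
print(f"span took {duration} ms, of which {in_gc} ms overlapped GC pauses")
# prints: span took 700 ms, of which 500 ms overlapped GC pauses
```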

Technically, it is possible to add this kind of detail. But the overhead of capturing all that internal behavior for every span makes it unrealistic for most systems.

There is another big issue with using traces as the main source of truth: coverage. Based on what I have seen, most companies are far from having full tracing across all their services. Even with OpenTelemetry adoption growing fast, it is still rare to see full end-to-end coverage in production systems.

So while tracing-based RCA has a lot of potential, it often struggles in real-world environments. If a service is not instrumented, or if important internal metrics are missing from the spans, you are left guessing again.

All Available Data Based RCA

Now you might be wondering, why limit ourselves at all? Why not take every piece of telemetry we have (logs, metrics, traces, events) and give it all to an AI model? Let it figure out the root cause for us. It sounds like the perfect solution.

And yes, this is the dream. But in reality, there are a few big challenges.

The first one is limited context. No matter how powerful the model is, there is always a limit to how much data it can handle at once. We cannot simply feed in all our logs, metrics, and traces from the past hour and expect the model to magically find the answer. The context window is a real and ever-present constraint.
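A quick back-of-the-envelope calculation shows the scale of the mismatch. Every number here is an assumption picked for illustration (log rate, tokens per line, window size), so plug in your own.

```python
def estimated_tokens(lines_per_second, seconds, tokens_per_line):
    """Rough token count for a stream of log lines."""
    return lines_per_second * seconds * tokens_per_line


# Assumed figures: a modest 200 log lines per second for one hour,
# about 30 tokens per line, and a 200k-token context window.
log_tokens = estimated_tokens(lines_per_second=200, seconds=3600, tokens_per_line=30)
context_window = 200_000

print(f"one hour of logs: {log_tokens:,} tokens")
print(f"that is {log_tokens / context_window:.0f}x the context window")
# prints:
# one hour of logs: 21,600,000 tokens
# that is 108x the context window
```

And that is logs alone, before any metrics or traces are added.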

The second issue is missing context. Even if we manage to include all the raw data, the model still needs to understand how the system works. It needs to know what each service does, how they are connected, what each metric represents, what is normal, and what is not. Without this understanding, the model is just guessing.

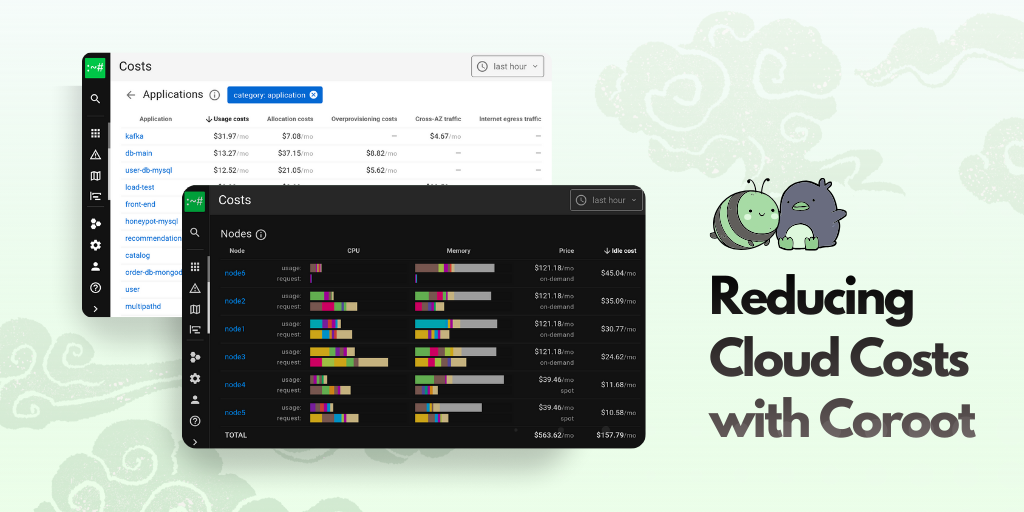
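One piece of that missing context is simply knowing who calls whom. As a rough sketch, a service map can be a plain adjacency list, and a breadth-first walk gives the list of dependencies worth inspecting when a service misbehaves. The service names and the `calls` map below are hypothetical.

```python
from collections import deque

# Hypothetical service map: each service lists what it calls directly.
calls = {
    "frontend": ["checkout", "catalog"],
    "checkout": ["payments", "orders-db"],
    "catalog": ["catalog-db"],
    "payments": [],
    "orders-db": [],
    "catalog-db": [],
}


def downstream_dependencies(service, calls):
    """Breadth-first list of everything `service` depends on, nearest first."""
    seen, order = {service}, []
    queue = deque([service])
    while queue:
        current = queue.popleft()
        for dep in calls.get(current, []):
            if dep not in seen:  # also protects against cycles in the map
                seen.add(dep)
                order.append(dep)
                queue.append(dep)
    return order


print(downstream_dependencies("checkout", calls))
# prints: ['payments', 'orders-db']
```

A map like this is only the skeleton. The model would still need to know what each metric on each node means and what its normal range looks like.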

There is also the issue of data coverage. When we started working on Coroot, we did not plan to build our own agent. The idea was to rely on existing telemetry from tools like Datadog and other vendors. But after taking a closer look, we realized that even well-instrumented systems were missing important signals. The data was not complete.

We also found that many users are not eager to set things up manually. If collecting the right data requires code changes or extra effort, it often does not get done. That is why we decided to use eBPF to gather low-level data directly from the system. This way, we can collect precise and complete telemetry automatically, without requiring users to change their code or configure anything manually.

Despite the complexity, this approach is the most promising when it comes to accuracy. It gives us the best chance of truly understanding what went wrong and why. That is why we chose to go this route at Coroot. We believe that complete, automatic data collection is the key to making root cause analysis actually useful.

Coroot’s Approach

Coroot follows the all-data approach, aiming to make AI-powered root cause analysis useful within minutes of installation, no matter how the system is set up.

If OpenTelemetry is already in place, existing traces and metrics are used. If not, Coroot automatically collects telemetry using eBPF, including low-level metrics, logs, Kubernetes events, and other signals. This works without code changes or manual setup.

To stay within the context limits of large language models, raw telemetry is not passed directly to the model. Instead, a pre-analysis step selects only the most relevant findings, and these are used as the model's input.

This makes it possible to fit the full prompt into 10,000 to 20,000 tokens, which is enough to provide clear and focused context for accurate and useful analysis.
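To give a feel for what "select the most relevant findings and stay within a budget" can mean, here is a generic sketch. To be clear, this is not Coroot's actual pre-analysis. The `Finding` type, the relevance scores, the four-characters-per-token estimate, and the greedy strategy are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    summary: str
    relevance: float  # higher means more likely related to the incident


def rough_token_count(text: str) -> int:
    """Crude estimate of about 4 characters per token. Real tokenizers differ."""
    return max(1, len(text) // 4)


def select_findings(findings, token_budget):
    """Greedily keep the most relevant findings that still fit in the budget."""
    selected, used = [], 0
    for finding in sorted(findings, key=lambda f: f.relevance, reverse=True):
        cost = rough_token_count(finding.summary)
        if used + cost <= token_budget:
            selected.append(finding)
            used += cost
    return selected, used


# Hypothetical findings produced by some earlier analysis step.
findings = [
    Finding("checkout: p99 latency rose sharply after a traffic increase", 0.9),
    Finding("checkout JVM: time spent in GC pauses grew at the same time", 0.8),
    Finding("catalog-db: routine backup finished successfully", 0.1),
]

selected, used = select_findings(findings, token_budget=15_000)
prompt_context = "\n".join(f"- {f.summary}" for f in selected)
print(prompt_context)
```

The design choice that matters is the order of operations: rank and trim first, then build the prompt, so the model sees a short list of findings rather than raw telemetry.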

Final Thoughts

AI has a lot of potential to make root cause analysis faster, more accurate, and less frustrating. But it is not magic. The real value depends on having the right data, full coverage, and enough context for the system to understand what that data actually means.

Each approach we explored has its pros and cons. Some focus on alerts, others rely on logs or traces. But the most promising results come from using all available data, adding the missing context, and applying AI where it can truly help. Not to replace people, but to help them see the bigger picture and act with confidence.

That is the path we are taking at Coroot. The goal is not just automation for its own sake. It is about giving engineers the insights they need, without the guesswork or busywork. Because in the end, what really matters is finding and fixing problems quickly.