Load Generator Flood Homepage

Failure scenario

This scenario occurs when the loadGeneratorFloodHomepage flag is enabled in the flagd configuration, either through a Kubernetes ConfigMap or the flagd UI.

Once the flag is enabled, the load generator floods the homepage with a far larger volume of requests than normal.
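A flagd flag definition carries a state, a set of variants, and a default variant, and switching the default variant is one way to turn a flag on. The minimal sketch below assumes the flag key loadGeneratorFloodHomepage with an "on" variant of 100 and an "off" variant of 0, modeled on the OpenTelemetry demo; the values and the helper name enable_flag are illustrative, not copied from the demo's files.

```python
import json

# Hypothetical excerpt of a flagd flag-definition file; the key and
# variant values are assumptions modeled on the OpenTelemetry demo.
FLAGD_CONFIG = """
{
  "flags": {
    "loadGeneratorFloodHomepage": {
      "state": "ENABLED",
      "variants": {"on": 100, "off": 0},
      "defaultVariant": "off"
    }
  }
}
"""


def enable_flag(config_text: str, key: str, variant: str = "on") -> str:
    """Return the config with flag `key` switched to `variant` (hypothetical helper)."""
    config = json.loads(config_text)
    flag = config["flags"][key]
    if variant not in flag["variants"]:
        raise ValueError(f"unknown variant {variant!r} for flag {key!r}")
    flag["state"] = "ENABLED"
    flag["defaultVariant"] = variant  # flagd serves this variant by default
    return json.dumps(config, indent=2)


updated = enable_flag(FLAGD_CONFIG, "loadGeneratorFloodHomepage")
print(json.loads(updated)["flags"]["loadGeneratorFloodHomepage"]["defaultVariant"])
```

The resulting JSON would then be applied through whatever ConfigMap flagd reads in your deployment, or the same change made in the flagd UI.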

Root Cause Analysis by Coroot

How it works

1. Anomaly detection

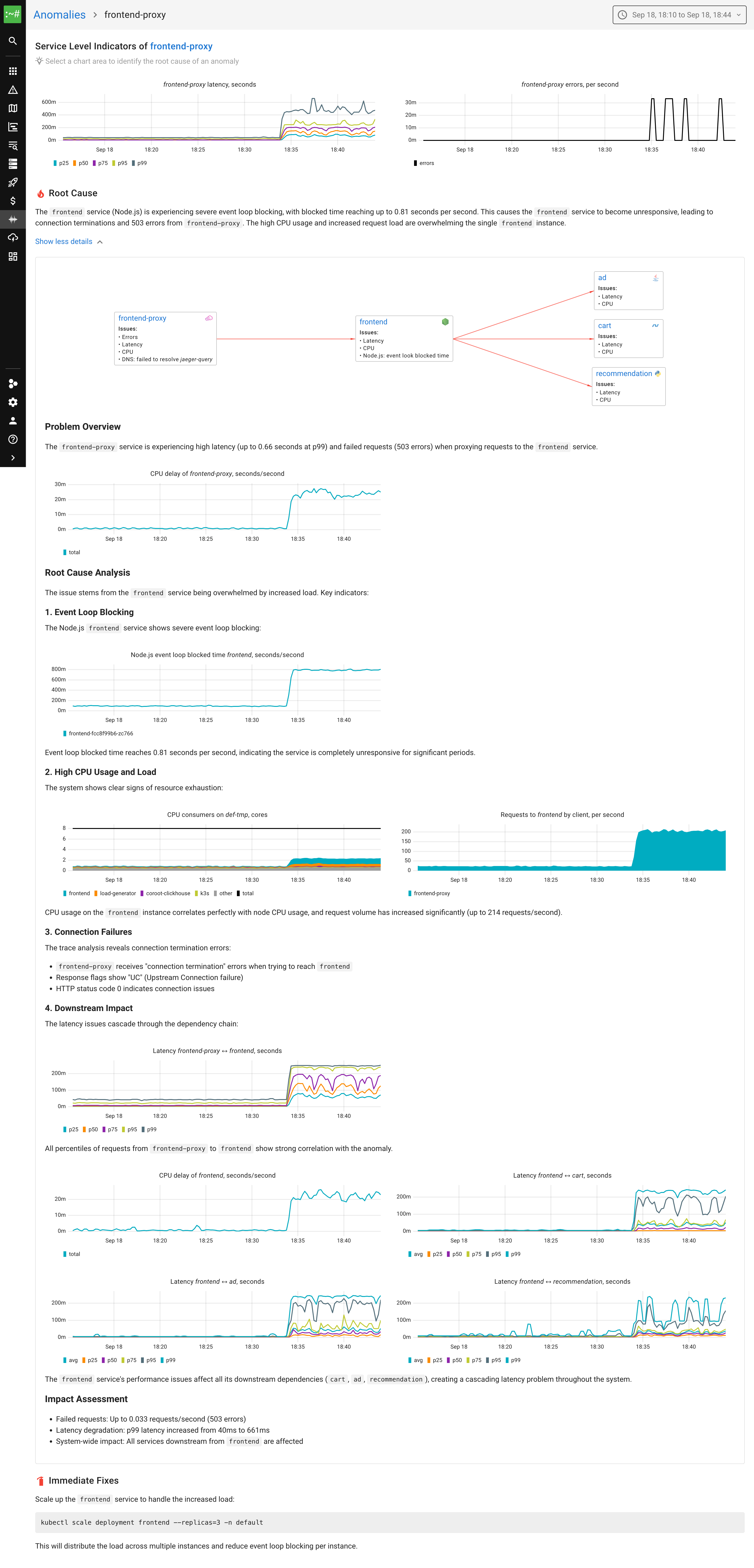

Coroot detected an anomaly in the frontend-proxy service: latency increased and requests started failing.
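As a rough illustration of this kind of check (not Coroot's implementation), the sketch below flags a service when its error rate or its share of slow requests breaks an objective. The thresholds (99% of requests succeeding, 95% of requests faster than the latency threshold), the ServiceWindow type, and all request counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServiceWindow:
    """Request counts for one service over a time window (hypothetical type)."""
    name: str
    total: int
    failed: int
    slower_than_threshold: int  # requests slower than the latency threshold


def find_anomalies(windows, availability_slo=0.99, latency_slo=0.95):
    """Return (service, reason) pairs for windows that violate either objective."""
    anomalies = []
    for w in windows:
        if w.total == 0:
            continue  # no traffic, nothing to judge
        availability = 1 - w.failed / w.total
        fast_share = 1 - w.slower_than_threshold / w.total
        if availability < availability_slo:
            anomalies.append((w.name, "errors"))
        if fast_share < latency_slo:
            anomalies.append((w.name, "latency"))
    return anomalies


# Made-up numbers for illustration only.
windows = [
    ServiceWindow("frontend-proxy", total=10_000, failed=300, slower_than_threshold=1_200),
    ServiceWindow("cart", total=2_000, failed=2, slower_than_threshold=10),
]
print(find_anomalies(windows))
```

Checking success rate and latency separately lets a single service be reported for both symptoms at once, which matches the combination of rising latency and failing requests described above.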

2. eBPF-based metrics correlation

By analyzing metrics collected with eBPF, Coroot found that every service in the affected dependency chain showed higher latency, and it traced this chain-wide slowdown back to a flood of traffic hitting frontend-proxy.
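One simple way to reason about this step (a toy heuristic, not Coroot's algorithm) is to walk the call graph from the entry point, keep the services whose latency rose against a baseline, and then check whether the entry point's request rate rose as well. The call graph, the services other than frontend-proxy and frontend, all metric values, and the 1.5× threshold are hypothetical.

```python
from collections import deque

# Hypothetical call graph: caller -> callees.
CALLS = {
    "frontend-proxy": ["frontend"],
    "frontend": ["product-catalog", "currency"],
    "product-catalog": [],
    "currency": [],
}

# Hypothetical (baseline, current) values per service.
LATENCY_MS = {
    "frontend-proxy": (40, 900),
    "frontend": (35, 850),
    "product-catalog": (5, 9),
    "currency": (2, 4),
}
REQUESTS_PER_S = {"frontend-proxy": (20, 400)}


def degraded_chain(entry, ratio=1.5):
    """Breadth-first walk from `entry`, collecting services whose latency grew by `ratio` or more."""
    chain, queue, seen = [], deque([entry]), {entry}
    while queue:
        svc = queue.popleft()
        base, now = LATENCY_MS[svc]
        if now / base >= ratio:
            chain.append(svc)
        for callee in CALLS[svc]:
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return chain


chain = degraded_chain("frontend-proxy")
base_rps, now_rps = REQUESTS_PER_S["frontend-proxy"]
print(chain, "traffic x", now_rps / base_rps)
```

When every service downstream of the entry point slows down together while the entry point's traffic has multiplied, the incoming load is a more likely common cause than any single dependency.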

3. Understanding the cause

Coroot revealed that the sudden spike in requests drove up CPU usage in the frontend service. The nodes had spare CPU capacity overall; the real bottleneck was the event loop of the frontend service, which runs on Node.js. Because Node.js executes request-handling code on a single-threaded event loop, one instance can use at most about one CPU core for that work. Once traffic spiked, event loop blocking time grew to 800 ms per second, so the loop was busy 80% of the time and incoming requests waited behind it, which caused the latency. The fix is to scale out the frontend service so that the load is spread across multiple instances and CPU cores.
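The 800 ms of blocking per second corresponds to an event loop that is busy 80% of the time. A back-of-envelope estimate of how many replicas bring that below a target busy share follows. It assumes traffic is split evenly across replicas and that blocking time scales linearly with request rate, which real workloads only approximate; the 50% target, the single starting replica, and the function name replicas_needed are illustrative assumptions.

```python
import math


def replicas_needed(blocked_ms_per_s, target_busy_fraction, current_replicas=1):
    """Estimate replicas so each event loop stays under the target busy fraction.

    Assumes load splits evenly across replicas and blocking scales linearly with load.
    """
    busy_fraction = blocked_ms_per_s / 1000  # 800 ms/s -> 0.8
    total_load = busy_fraction * current_replicas  # busy time summed over all loops
    return max(1, math.ceil(total_load / target_busy_fraction))


print(replicas_needed(800, 0.5))  # 0.8 / 0.5 = 1.6, rounded up to 2
```

Leaving headroom below 100% matters because an event loop that is almost always busy makes every request wait for the ones ahead of it, so latency grows well before the loop is fully saturated.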

Results

3/3

Successfully identified the frontend service as the bottleneck due to Node.js event loop blocking

Suggested scaling the frontend service to distribute load across multiple instances and CPU cores

Coroot also detected that the problem was due to increased traffic

LLM Usage Details

Costs are estimated at $3 per 1M input tokens and $15 per 1M output tokens.
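The cost of a run follows directly from these two prices. The sketch below applies them to hypothetical token counts; the figures are placeholders, not the usage of this analysis.

```python
INPUT_USD_PER_M = 3.0    # USD per 1M input tokens
OUTPUT_USD_PER_M = 15.0  # USD per 1M output tokens


def llm_cost_usd(input_tokens, output_tokens):
    """Cost in USD at the per-million-token prices quoted above."""
    return (input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M) / 1_000_000


# Hypothetical token counts, not figures from this analysis.
print(f"${llm_cost_usd(200_000, 10_000):.2f}")  # $0.75
```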
