Recommendation Service Memory Leak

Failure scenario

This performance issue is triggered by enabling the recommendationServiceCacheFailure flag in the flagd configuration.
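flagd reads flag definitions from JSON. A boolean flag is typically declared with `state`, `variants`, and `defaultVariant` fields, and "enabling" it means switching the default variant to the one that evaluates to true. The helper below is a hypothetical sketch that builds such a definition in Python. The `on`/`off` variant names and the surrounding file layout are assumptions and may differ from the demo's actual flag file.

```python
import json

# Hypothetical helper: builds a flagd-style flag definition document.
# Field names (state, variants, defaultVariant) follow the flagd
# flag-definition format; the "on"/"off" variant names are an assumption.
def flag_config(flag_name: str, enabled: bool) -> dict:
    return {
        "flags": {
            flag_name: {
                "state": "ENABLED",
                "variants": {"on": True, "off": False},
                # "on" makes the flag evaluate to True for every caller
                "defaultVariant": "on" if enabled else "off",
            }
        }
    }

# Serialize the definition the way it would appear in a flag file
print(json.dumps(flag_config("recommendationServiceCacheFailure", enabled=True), indent=2))
```

In practice you would edit the existing flag file rather than regenerate it; the point is only that toggling the scenario is a change to `defaultVariant`.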

When activated, this flag introduces a memory leak in the recommendation service.

Root Cause Analysis by Coroot

How it works

1. Anomaly detection

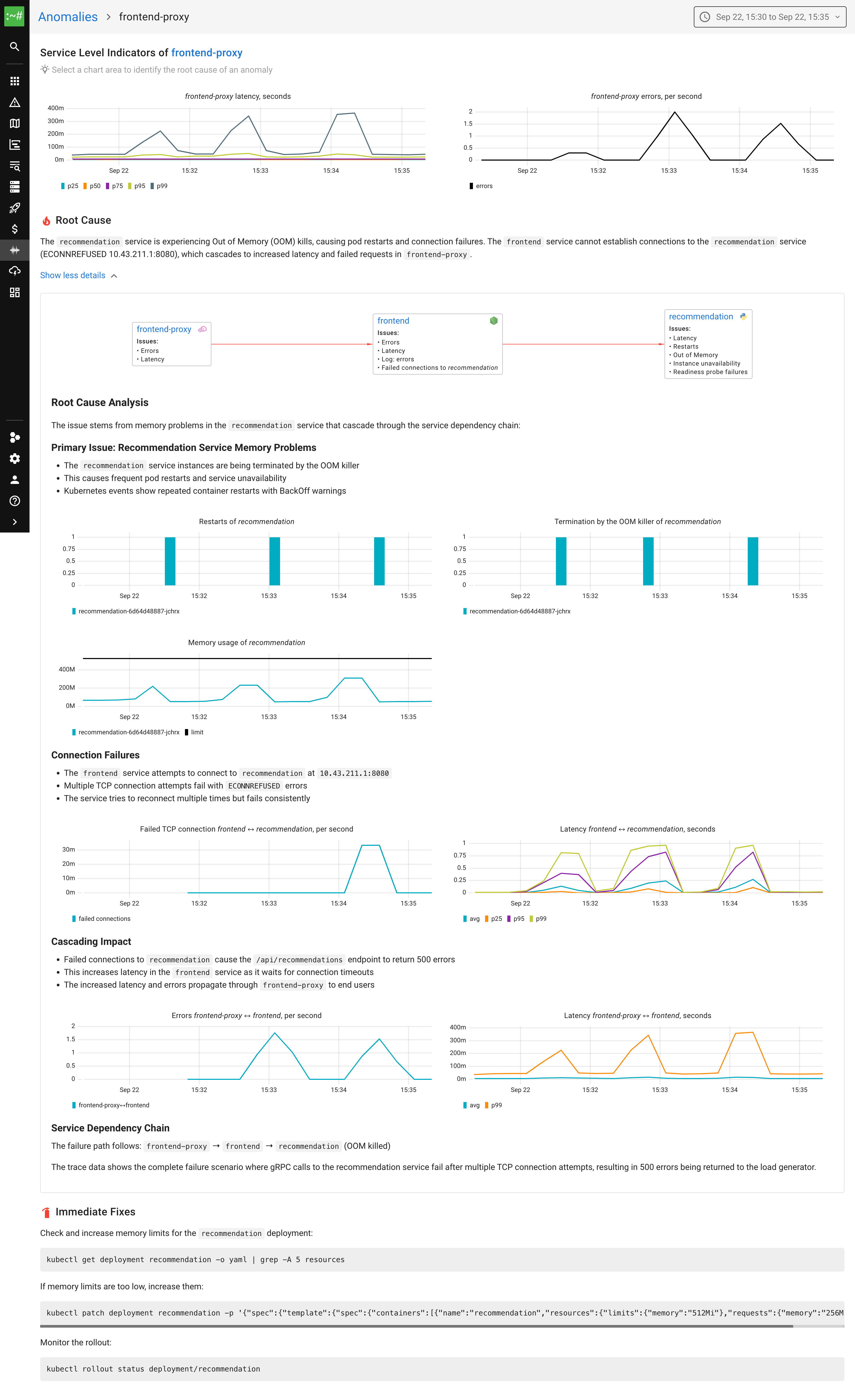

Coroot detected anomalies in the frontend-proxy service, identifying increased latency and error rates affecting user experience.
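Coroot's exact detection logic is not described here. As a rough, hypothetical illustration of this kind of check, the sketch below flags a window of requests as anomalous when its error rate or its share of slow requests violates an objective. The `Request` type and the thresholds (1% errors, 95% of requests under 500 ms) are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Request:
    latency_ms: float
    failed: bool

def is_anomalous(requests: list,
                 max_error_rate: float = 0.01,
                 latency_threshold_ms: float = 500.0,
                 min_fast_share: float = 0.95) -> bool:
    """Flag a window whose error rate or latency violates the assumed objectives."""
    if not requests:
        return False
    total = len(requests)
    error_rate = sum(r.failed for r in requests) / total
    # Share of requests that completed within the latency threshold
    fast_share = sum(r.latency_ms <= latency_threshold_ms for r in requests) / total
    return error_rate > max_error_rate or fast_share < min_fast_share
```

For example, a window of 90 fast successful requests and 10 slow failed ones is flagged because its 10% error rate exceeds the assumed 1% objective.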

2. eBPF-based metrics correlation

Using eBPF-collected metrics, Coroot traced the connection failures back to the recommendation service, correlating latency spikes along the entire service dependency chain to pinpoint the source of the ECONNREFUSED errors.
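A deliberately simplified, hypothetical version of this tracing step: starting from the service that shows symptoms, repeatedly follow the outgoing dependency with the most failed requests or refused connections (the kind of per-connection data eBPF can collect) until reaching a service whose own dependencies look healthy. The graph shape, service names, and error counts are illustrative assumptions, not Coroot's algorithm.

```python
def find_failing_source(deps: dict, edge_errors: dict, start: str) -> str:
    """Follow caller->callee edges with errors from `start` to the deepest failing service.

    deps:        service -> list of services it calls (assumed input shape)
    edge_errors: (caller, callee) -> count of failed requests / refused connections
    """
    current = start
    visited = {start}
    while True:
        failing = [
            callee for callee in deps.get(current, [])
            if edge_errors.get((current, callee), 0) > 0 and callee not in visited
        ]
        if not failing:
            # No unhealthy outgoing edges: treat this service as the source
            return current
        caller = current
        # Follow the dependency with the most errors
        current = max(failing, key=lambda callee: edge_errors[(caller, callee)])
        visited.add(current)

deps = {
    "frontend-proxy": ["frontend"],
    "frontend": ["recommendation", "cart"],
    "recommendation": ["product-catalog"],
}
edge_errors = {("frontend-proxy", "frontend"): 120, ("frontend", "recommendation"): 118}
print(find_failing_source(deps, edge_errors, "frontend-proxy"))
```

With these made-up counts the walk goes frontend-proxy → frontend → recommendation and stops there, because the recommendation service's call to product-catalog shows no errors.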

3. Understanding the cause

Coroot determined that memory problems in the recommendation service were causing frequent restarts. The analysis revealed that the OOM killer was terminating the container due to memory exhaustion, and Kubernetes events showed repeated container restarts and periods of service unavailability. The impact cascaded through the dependency chain (recommendation → frontend → frontend-proxy), causing 500 errors and connection timeouts.
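The OOM kills and restarts described above are visible in the pod status that Kubernetes reports: each entry in `status.containerStatuses` has a `restartCount`, and a container killed for exceeding its memory limit shows `lastState.terminated.reason` set to `OOMKilled`. The sketch below scans a pod object (for example, the parsed output of `kubectl get pod <name> -o json`); the function name and the sample data are hypothetical.

```python
def oom_killed_containers(pod: dict) -> list:
    """Return (container name, restart count) for containers last terminated by the OOM killer."""
    result = []
    for cs in pod.get("status", {}).get("containerStatuses", []):
        terminated = cs.get("lastState", {}).get("terminated", {})
        # Kubernetes reports OOM kills with reason "OOMKilled"; the exit code is
        # typically 137 (128 + SIGKILL).
        if terminated.get("reason") == "OOMKilled":
            result.append((cs["name"], cs.get("restartCount", 0)))
    return result

# Hypothetical pod status shaped like the Kubernetes API response
pod = {
    "status": {
        "containerStatuses": [
            {"name": "recommendation", "restartCount": 5,
             "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}}},
            {"name": "sidecar", "restartCount": 0, "lastState": {}},
        ]
    }
}
print(oom_killed_containers(pod))
```

A rising restart count combined with an `OOMKilled` reason is the signature of a leak, as opposed to a crash from an application error.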

Results

Score: 2/3

Successfully identified the recommendation service as the source of the memory exhaustion and OOM kills.

Suggested increasing the memory limits, which doesn't address the root cause: the memory leak needs to be analyzed and fixed in the application code.

Provided a complete analysis of the failure scenario, showing gRPC calls, connection attempts, and cascading latency through the entire service dependency chain.
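Raising the memory limit only postpones the OOM kill when memory grows without bound. A typical application-level fix is to cap the cache size. The sketch below is a hypothetical least-recently-used (LRU) cache built on `collections.OrderedDict`; it is one possible fix for an unbounded cache, not the change made in the recommendation service.

```python
from collections import OrderedDict

class BoundedCache:
    """LRU cache that never holds more than `max_entries` items."""

    def __init__(self, max_entries: int) -> None:
        if max_entries <= 0:
            raise ValueError("max_entries must be positive")
        self.max_entries = max_entries
        self._data = OrderedDict()

    def get(self, key):
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        # Evict least recently used entries so memory stays bounded
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)

    def __len__(self) -> int:
        return len(self._data)
```

For caching the results of a pure function, `functools.lru_cache(maxsize=...)` gives the same bound with less code.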

LLM Usage Details

Costs are calculated at $3 per 1M input tokens and $15 per 1M output tokens.
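The cost of a run follows directly from these rates. The sketch below computes it in integer token units to avoid rounding surprises. The token counts in the usage example are hypothetical, not the usage of this RCA run.

```python
INPUT_PRICE_PER_MILLION = 3    # USD per 1M input tokens (rate stated above)
OUTPUT_PRICE_PER_MILLION = 15  # USD per 1M output tokens (rate stated above)

def llm_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the LLM cost in USD for the given token counts."""
    return (input_tokens * INPUT_PRICE_PER_MILLION
            + output_tokens * OUTPUT_PRICE_PER_MILLION) / 1_000_000

# Hypothetical run: 100,000 input tokens and 5,000 output tokens
print(llm_cost_usd(100_000, 5_000))  # 0.3 + 0.075 = 0.375 USD
```

Output tokens cost five times as much as input tokens, so a verbose explanation can outweigh a large amount of telemetry context.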

Try Coroot's AI RCA in your environment

See how Coroot finds root causes for your real production issues with a full enterprise trial.