AI is now a go-to tool for everything from writing to coding. Modern LLMs are so powerful that, with the right prompt and a few adjustments, they can handle tasks almost effortlessly.

At Coroot, we’ve been experimenting with AI for observability. Our goal is to make it useful in the final stage of troubleshooting—when we’ve already identified which service is causing issues, like Postgres, but finding the exact root cause is still tricky due to the many possible scenarios.

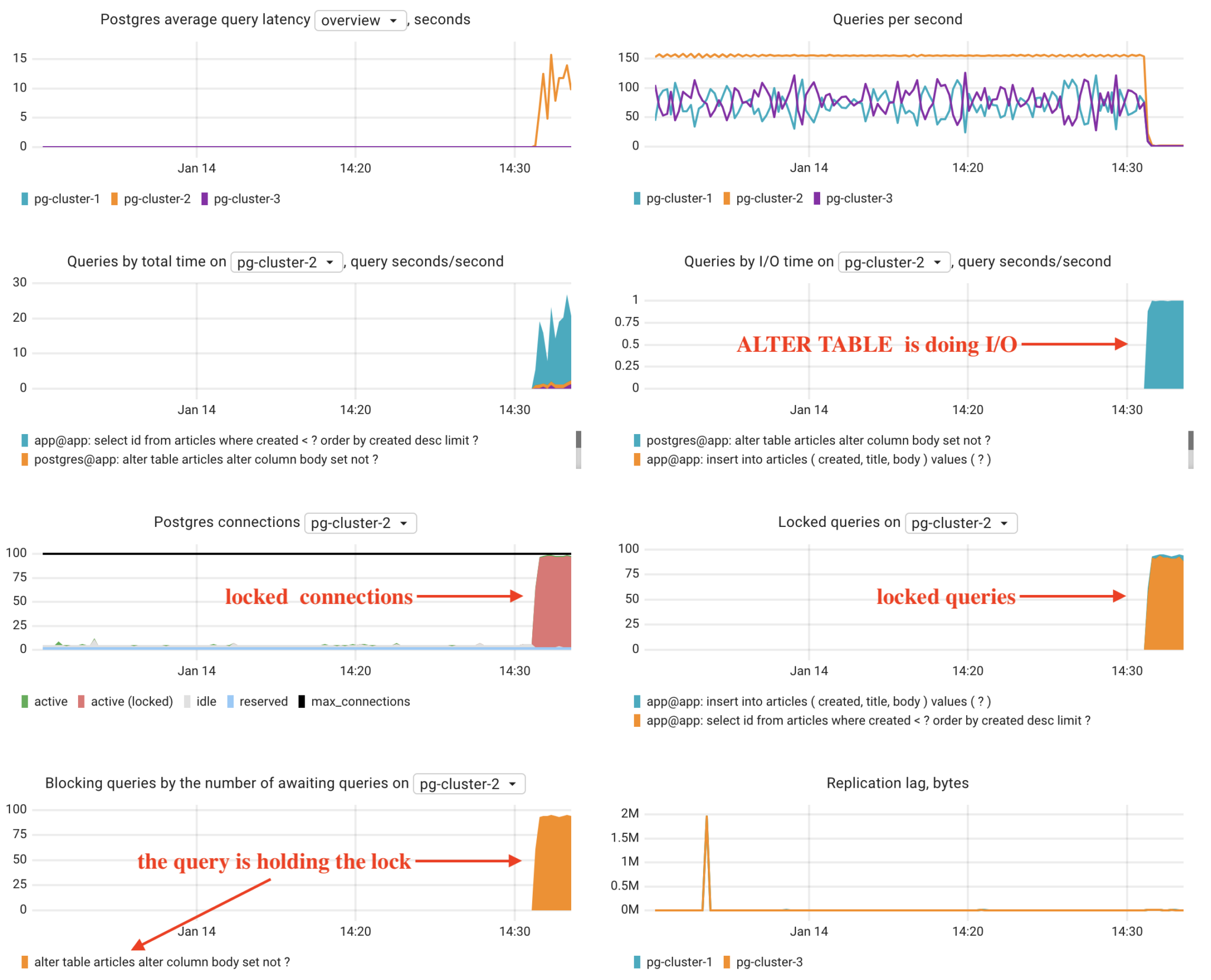

Recently, I was writing a blog post about monitoring a Postgres cluster managed by CloudNativePG. I love using chaos engineering to test our product, so I set up a real Postgres cluster, deployed a client app to generate load, and ran a problematic query:

ALTER TABLE … SET body NOT NULL on a 20GB table.

This query affected performance because it locked the table while checking for null values. Coroot quickly detected the issue and even highlighted the problematic query in its charts.

However, while Coroot provided the data, I still had to explain the charts in my post.

While reviewing the post, my co-founder, Peter Zaitsev, shared this feedback:

“I think this is very good, but it requires too much brainpower and the ability to understand cause and effect in complex systems. The end goal should be for Coroot to provide a specific narrative explanation—just like you did.”

And I completely agree.

Many of our users aren’t experts in areas like databases, network performance, or specific runtimes. We need to provide clear explanations, and ideally, even guidance on how to fix the problem.

So, I decided to test AI again on this specific case. Since laziness often drives progress, I skipped converting my telemetry data into a prompt and instead just uploaded a screenshot as input for the LLMs.

The result shocked me.

Here is my prompt, no other context has been provided:

SELECT pg_terminate_backend(pid) FROM pg_stat_activity WHERE query LIKE '%ALTER TABLE articles alter column body set not null%';

2. Restart Blocked Queries:

Once the ALTER TABLE is canceled, the system should return to normal operations. However, some queries might still be waiting, so check for any lingering locks:

SELECT * FROM pg_stat_activity WHERE wait_event IS NOT NULL;

ALTER TABLE articles ADD CONSTRAINT articles_body_not_null CHECK (body IS NOT NULL) NOT VALID;

Step 2: Backfill missing values (if any).

Step 3: Validate the constraint (still non-blocking):

ALTER TABLE articles VALIDATE CONSTRAINT articles_body_not_null;

Step 4: If no violations exist, alter the column:

ALTER TABLE articles ALTER COLUMN body SET NOT NULL;

pg_repack -t articles --dbname=mydatabase

If running in production, execute schema changes during maintenance windows to reduce impact.

That’s super impressive and surprisingly accurate. What shocked me the most was the model’s ability to interpret the screenshot as input.

We’re integrating AI into Coroot but won’t use screenshots, as it’s much simpler to explain complex system parameters programmatically.

It’s exciting that an LLM can ‘understand’ our domain and generate deep summaries with actionable insights. However, relying on a single vendor didn’t seem like a robust solution.

Fortunately, DeepSeek has emerged as a strong alternative to OpenAI’s model, especially with the recent release of its R1 model. Its open-source nature also gives us the option to self-host if needed, though that is not in our plans for now.

Now, let’s repeat the experiment using DeepSeek-R1:

The answer:

The data indicates a significant performance issue in pg-cluster-2, primarily caused by a long-running ALTER TABLE command on the articles table. This operation is causing table locks, blocking other critical queries (e.g., SELECT and INSERT), leading to:

– High query latency (10-15 seconds).

– A surge in active/locked connections.

– Query throughput drops.

– Replication lag in other clusters (minor).

Root Cause

The ALTER TABLE statement (alter table articles alter column body set not ?) is:

Solutions

-- Find the PID of the ALTER TABLE query

SELECT pid, query FROM pg_stat_activity WHERE query LIKE '%alter column body%';

-- Terminate it

SELECT pg_terminate_backend(<PID>);

Caution: Ensure this won’t corrupt data (e.g., if the ALTER is part of a migration).

Run schema changes during low-traffic periods or use online DDL tools like:

– pg_repack (to rebuild tables without locks).

– CREATE INDEX CONCURRENTLY (for index-related changes).

If the column is being set to NOT NULL, ensure it already contains no NULL values. Pre-run:

UPDATE articles SET body = '' WHERE body IS NULL; -- Example cleanup Long-Term Improvements

Add a lock timeout to prevent indefinite blocking:

SET LOCAL lock_timeout = '5s';

ALTER TABLE ...;

Monitor and Tune Queries:

– Optimize the `SELECT id FROM articles WHERE created < ? …` query with an index on created.

– Batch the INSERT operations to reduce contention.

Connection Pooling:

Use a connection pooler (e.g., PgBouncer) to manage active connections and prevent exhaustion.

Replication Checks:

Investigate replication lag in `pg-cluster-1` and `pg-cluster-3` to ensure failover readiness.

Summary

The blocking ALTER TABLE is the critical issue. Address it first by terminating or rescheduling the operation, then implement safeguards to avoid similar incidents. Optimize queries and use non-locking DDL tools for future schema changes.

DeepSeek-R1 delivers an answer that is quite close to OpenAI’s. I’d say it is accurate enough to be a viable alternative to OpenAI’s models.

Additionally, I tested other open-source models in my lab, but I was only able to run LLMs with up to 70 billion parameters. In this range, I didn’t find anything that came close to OpenAI or the larger DeepSeek models for our needs.

Yes, but only if you have enough data describing your system’s behavior.

As you saw on Coroot’s dashboard, the answer was already there, we just needed to interpret the charts and make a conclusion. The real magic of AI in this case was its ability to understand the bigger picture, apply domain knowledge, and suggest how to fix the issue. This wouldn’t have been possible with just CPU, memory, and disk usage alone.

That’s where Coroot comes in. It covers the full observability cycle, from collecting telemetry data to providing actionable insights, helping you pinpoint root causes in seconds. AI-powered features are on the way.

Ready to give Coroot a try? Get started with the free Coroot Community Edition or explore advanced features with a free trial of Coroot Enterprise Edition.

If you like Coroot, give us a ⭐ on GitHub️.

Any questions or feedback? Reach out to us on Slack.